Optical Issues In These Digital Days

It goes without saying that digital has changed lots of things in photography. One matter that requires more thorough investigation is how it affects optics and assumptions we have made about the design, recommendations, and even the naming conventions we use. While this column length does not allow for full discussion, I'll raise some of the issues and open the floor to our writers and readers to investigate and discuss these matters further.

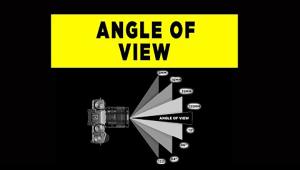

Lenses for D-SLRs, even those called "digital only," are still marked in the 35mm format fashion, which means that we have to multiply by the ratio of the 35mm frame size to the sensor in question to make sense of it all. That could be 1.5, 1.6, or more for digicams. This way of expressing the lens's effect on our images, and its inferred angle of view, might make some think they have a very wide angle lens if they mount a 24mm lens, when on a 1.5x body it frames more like a 36mm. The multiplication factor is a transitional way of expressing things. Is there a better way of expressing this?
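
One candidate answer is to state the angle of view directly. The sketch below is only an illustration of the arithmetic: it assumes a 36 x 24mm reference frame, and the 23.6 x 15.7mm sensor is a hypothetical stand-in for a typical "1.5x" D-SLR, not a figure from any particular camera. The function names are mine.

```python
import math

# Diagonal of the 35mm frame (36 x 24 mm), used as the reference format.
FULL_FRAME_DIAGONAL_MM = math.hypot(36.0, 24.0)


def crop_factor(sensor_width_mm, sensor_height_mm):
    """Ratio of the 35mm frame diagonal to the sensor diagonal."""
    return FULL_FRAME_DIAGONAL_MM / math.hypot(sensor_width_mm, sensor_height_mm)


def equivalent_focal_length(focal_length_mm, factor):
    """Focal length that gives the same framing on a 35mm frame."""
    return focal_length_mm * factor


def diagonal_angle_of_view(focal_length_mm, sensor_width_mm, sensor_height_mm):
    """Diagonal angle of view in degrees for a lens focused at infinity."""
    diagonal = math.hypot(sensor_width_mm, sensor_height_mm)
    return math.degrees(2 * math.atan(diagonal / (2 * focal_length_mm)))


# Hypothetical sensor size, assumed for illustration only.
SENSOR_W, SENSOR_H = 23.6, 15.7

factor = crop_factor(SENSOR_W, SENSOR_H)
print(f"crop factor: {factor:.2f}")
print(f"24mm frames like: {equivalent_focal_length(24, factor):.0f}mm")
print(f"24mm on 35mm frame: {diagonal_angle_of_view(24, 36.0, 24.0):.0f} degrees")
print(f"24mm on this sensor: {diagonal_angle_of_view(24, SENSOR_W, SENSOR_H):.0f} degrees")
```

Marking a lens by the angle it covers on a given body would sidestep the multiplication, though it would tie the marking to one sensor size.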

Given that stated focal lengths have to be adapted to the format to understand their effect, how does that change depth of field calculations? It does have an effect, with greater depth of field at wider apertures than the "norm," at least in relation to assumed calculations with lenses on 35mm cameras. Does that mean that we can start using the "sweet spot" on the lens more and not stop down as much as we learned to get deeper depth of field effects?
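
To put rough numbers on that question, here is a sketch using the standard thin-lens depth of field formulas. Everything specific in it is an assumption for illustration: a 0.030mm circle of confusion for the 35mm frame, scaled down by a 1.5 factor for the smaller sensor, a subject at 3 meters, and a 50mm lens on 35mm compared with the focal length that gives the same framing on the smaller format.

```python
import math


def hyperfocal_mm(focal_mm, f_number, coc_mm):
    """Hyperfocal distance in mm for a given circle of confusion."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm


def depth_of_field_mm(focal_mm, f_number, coc_mm, subject_mm):
    """Return (near limit, far limit) of acceptable sharpness in mm."""
    h = hyperfocal_mm(focal_mm, f_number, coc_mm)
    near = subject_mm * (h - focal_mm) / (h + subject_mm - 2 * focal_mm)
    if subject_mm >= h:
        # Focused at or beyond the hyperfocal distance: sharp to infinity.
        return near, math.inf
    far = subject_mm * (h - focal_mm) / (h - subject_mm)
    return near, far


# Assumed values, not measurements.
FACTOR = 1.5          # format multiplication factor
COC_35MM = 0.030      # circle of confusion for the 35mm frame, in mm
SUBJECT = 3000.0      # subject distance in mm

full = depth_of_field_mm(50.0, 8.0, COC_35MM, SUBJECT)
crop = depth_of_field_mm(50.0 / FACTOR, 8.0, COC_35MM / FACTOR, SUBJECT)

print(f"35mm frame, 50mm at f/8: {(full[1] - full[0]) / 1000:.2f} m in focus")
print(f"1.5x sensor, same framing at f/8: {(crop[1] - crop[0]) / 1000:.2f} m in focus")
```

Under these assumptions the smaller format holds noticeably more in focus at the same f-stop and framing, and opening up by roughly a stop brings the two back into line. Whether that stop is better spent at the lens's "sweet spot" is the open question.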

Flare factor and sharpness: Yes, there is such a thing as the "flare factor" and it's the ratio of the scene brightness range to the brightness range of the recorded image. The ideal is 1:1. Flare hurts the contrast and vividness of an image. Yet, if you look at a direct, raw image it's pretty flat, and image processing has a lot to do with enhancing contrast. Given that digital images are inherently "flat," just how much does the image contrast a lens delivers depend on algorithms and how much on multi-coating and other corrections?

Similarly, sharpness is also enhanced with image processing, which means that resolution tests (and I mean resolution in terms of resolving line pairs) can no longer be strictly a matter of mounting a given lens on one body. In other words, the resolution of the lens might be variable according to the image processor to which the recorded image is fed. It has been a given that digital resolves better than film, but is that superiority based on sophisticated number crunching more than what the lens itself can or cannot deliver? Or, more bluntly put, is digital's claimed superior resolution based pretty much on image processing?

Shot steadiness used to depend on the 1/focal length rule, but with VR (or IS or whatever) the rules changed and two- to even four-stop advantages have been verified. So, do we throw out that "old" rule?
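
Rather than throwing the rule out, one reading is that stabilization simply shifts it by the claimed number of stops. The sketch below assumes exactly that: each stop doubles the slowest usable exposure time. The optional format factor is a further assumption of mine (using the 35mm-equivalent focal length), not something the old rule specified.

```python
def slowest_handheld_shutter(focal_length_mm, stabilization_stops=0.0, format_factor=1.0):
    """Slowest handheld exposure time in seconds under the 1/focal length rule.

    Each stop of stabilization is assumed to double the usable time;
    format_factor optionally converts to the 35mm-equivalent focal length.
    """
    base_time = 1.0 / (focal_length_mm * format_factor)
    return base_time * 2.0 ** stabilization_stops


for stops in (0, 2, 4):
    seconds = slowest_handheld_shutter(200, stabilization_stops=stops)
    print(f"200mm, {stops} stops of stabilization: about 1/{round(1 / seconds)} sec")
```

The old rule was always a starting point that varied with the photographer, so the output is a guide to where to begin testing, not a guarantee.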

The point is that you cannot separate digital imaging from image processing. And now you can't separate optics from processing power. What happens when light strikes a digital sensor inside the camera has changed all our assumptions about what we could and could not do with an image; now we should begin to consider our assumptions about how we relate to the glass on the front of the camera as well.
